Dimensionality Analysis - Machine Learning

Feature Selection

- Eliminate features based on analysis

- Select a subset of relevant features

- Types

- Filter Methods

- Basic Filter Method

- Constant Features

- Quasi Constant Features

- Duplicate Features
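
The three basic filters above can be sketched in plain Python. This is a minimal illustration, not a reference implementation: the function name `basic_filter`, the dict-of-lists data layout, and the `quasi_threshold` cutoff are assumptions made for the example.

```python
from collections import Counter

def basic_filter(columns, quasi_threshold=0.99):
    """Return names of constant, quasi-constant and duplicate columns.

    columns: dict mapping a feature name to a list of values (assumed layout).
    quasi_threshold: assumed cutoff; a column counts as quasi-constant when
    its most common value covers at least this fraction of the rows.
    """
    constant, quasi_constant, duplicate = [], [], []
    seen = {}  # tuple of values -> first column name holding them
    for name, values in columns.items():
        # Share of rows taken by the most frequent value in this column.
        top_share = Counter(values).most_common(1)[0][1] / len(values)
        if top_share == 1.0:
            constant.append(name)
        elif top_share >= quasi_threshold:
            quasi_constant.append(name)
        key = tuple(values)
        if key in seen:
            duplicate.append(name)  # same values as an earlier column
        else:
            seen[key] = name
    return constant, quasi_constant, duplicate

# Toy data, made up for illustration only.
data = {
    "a": [1, 2, 3, 4, 5],
    "b": [7, 7, 7, 7, 7],  # constant
    "c": [0, 0, 0, 0, 1],  # quasi-constant at a 0.8 threshold
    "d": [1, 2, 3, 4, 5],  # duplicate of "a"
}
constant, quasi, dup = basic_filter(data, quasi_threshold=0.8)
print(constant, quasi, dup)
```

Columns flagged by any of the three lists are candidates for removal before further analysis.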

- Correlation Filter Methods => Measure how the target attribute changes as a feature's values change

- Pearson Correlation Coefficient

- Spearman's Rank Correlation Coefficient

- Kendall's Rank Correlation Coefficient
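
As a rough sketch of how a correlation filter scores one feature against the target, the Pearson and Spearman coefficients can be computed by hand as below. The helper names (`pearson`, `ranks`, `spearman`) and the sample values are assumptions for illustration; Kendall's coefficient is left out to keep the example short.

```python
import math

def pearson(x, y):
    """Pearson correlation: covariance divided by the product of spreads."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg
        i = j + 1
    return result

def spearman(x, y):
    """Spearman correlation: Pearson correlation applied to the ranks."""
    return pearson(ranks(x), ranks(y))

# Made-up feature with a monotonic but non-linear link to the target.
feature = [1, 2, 3, 4, 5]
target = [1, 4, 9, 16, 25]
r = pearson(feature, target)
rho = spearman(feature, target)
print(round(r, 3), round(rho, 3))
```

Because the relationship is monotonic but not linear, the rank-based coefficient comes out higher than the Pearson coefficient here, which is one reason to look at both.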

- Statistical & Ranking Filter Methods

- Mutual Information

- Chi Square Score

- ANOVA Univariate

- Univariate ROC-AUC / RMSE
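
To make one of the statistical filters concrete, here is a small sketch of the Pearson chi-square statistic between a categorical feature and a categorical target, computed from their contingency table. The function name `chi_square_score` and the sample data are assumptions for illustration; library implementations may define their scores differently.

```python
from collections import Counter

def chi_square_score(feature, target):
    """Chi-square statistic of the feature/target contingency table."""
    n = len(feature)
    observed = Counter(zip(feature, target))  # counts per (feature, target) pair
    f_totals = Counter(feature)               # row totals
    t_totals = Counter(target)                # column totals
    score = 0.0
    for f, f_count in f_totals.items():
        for t, t_count in t_totals.items():
            # Expected count if feature and target were independent.
            expected = f_count * t_count / n
            score += (observed[(f, t)] - expected) ** 2 / expected
    return score

# Made-up data: one feature mirrors the target, the other is unrelated to it.
target = [1, 1, 0, 0]
informative = ["a", "a", "b", "b"]
uninformative = ["a", "b", "a", "b"]
high = chi_square_score(informative, target)
low = chi_square_score(uninformative, target)
print(high, low)
```

A higher score suggests a stronger association with the target, so features can be ranked by score and the top ones kept.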

- Wrapper Methods

- Search Methods

- Forward Feature Selection

- Backward Feature Elimination

- Exhaustive Feature Selection

- Sequential Floating

- Sequential Floating Forward Selection (SFFS)

- Sequential Floating Backward Selection (SFBS)

- Other Search
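
Forward Feature Selection, the first search method listed above, can be sketched as a greedy loop. This is a minimal illustration under stated assumptions: `forward_select` is a hypothetical helper, and `toy_score` with its `GAIN` table is a made-up stand-in for the cross-validated model score a real wrapper method would compute.

```python
def forward_select(candidates, score_fn, max_features=None):
    """Greedy forward selection: repeatedly add the best-scoring feature."""
    selected = []
    best_score = float("-inf")
    remaining = list(candidates)
    limit = max_features if max_features is not None else len(remaining)
    while remaining and len(selected) < limit:
        # Score every one-feature extension of the current subset.
        trial_scores = [(score_fn(selected + [f]), f) for f in remaining]
        score, feature = max(trial_scores, key=lambda t: t[0])
        if score <= best_score:
            break  # no candidate improves the score, so stop
        selected.append(feature)
        remaining.remove(feature)
        best_score = score
    return selected, best_score

# Hypothetical per-feature gains; a real scorer would train and validate a model.
GAIN = {"age": 0.30, "income": 0.25, "zip_noise": 0.0}

def toy_score(subset):
    # Sum of gains minus a small penalty per feature (assumption for the demo).
    return sum(GAIN[f] for f in subset) - 0.01 * len(subset)

chosen, score = forward_select(list(GAIN), toy_score)
print(chosen, round(score, 2))
```

Backward Feature Elimination follows the same pattern in reverse: start from all features and repeatedly drop the one whose removal hurts the score least.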

- Embedded Methods

- Regularization

- Tree Based Importance

- Hybrid Method

- Filter & Wrapper Methods

- Embedded & Wrapper Methods

- Recursive Feature Elimination

- Recursive Feature Addition

Feature Extraction

- Used when the original raw data cannot be used directly and must be transformed into the desired form

- Examples: text, images, geospatial data, date and time, web data, sensor data

- Creates a new, smaller set of features that captures most of the useful information in the raw data

- Combine two or more features

- Types

- Dimensionality Reduction

- Principal Component Analysis (PCA)

- Helps reduce overfitting by lowering the number of input dimensions

- Steps to find PC

- Find principal components from different views of the data

- Number of principal components ≤ Number of attributes

- PC1 is given higher priority than PC2 because it captures more of the variance

- PC1 and PC2 must be orthogonal => Uncorrelated with each other

- Steps to solve problem

- Given X and Y, find the mean of each

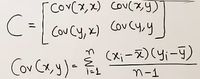

- Find the covariance matrix (C) of size 2 × 2 (based on the number of attributes, here X and Y)

- Find the eigenvalues as the roots of the characteristic equation (det(C - λI) = 0)

- Find the eigenvector (V) corresponding to each eigenvalue using the equation (CV = λV)

- Set one component equal to 1 and solve for the other to get a temporary eigenvector

- Find the square root of the sum of the squares of both components and divide both components by this number to get the final (unit-length) eigenvector
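
The steps above can be followed literally for two attributes in plain Python. This is a sketch under assumptions: the function name `pca_2d` and the sample values are made up for illustration, the covariance uses the sample (n - 1) divisor, and the off-diagonal covariance is assumed to be non-zero.

```python
import math

def pca_2d(x, y):
    """Eigenvalues and unit eigenvectors of the 2 x 2 covariance matrix."""
    n = len(x)
    # Step 1: means of both attributes.
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Step 2: covariance matrix C = [[cxx, cxy], [cxy, cyy]] (n - 1 divisor).
    cxx = sum((a - mean_x) ** 2 for a in x) / (n - 1)
    cyy = sum((b - mean_y) ** 2 for b in y) / (n - 1)
    cxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / (n - 1)
    # Step 3: det(C - lam*I) = 0 gives lam^2 - trace*lam + det = 0.
    trace, det = cxx + cyy, cxx * cyy - cxy ** 2
    root = math.sqrt(trace ** 2 - 4 * det)
    eigenvalues = [(trace + root) / 2, (trace - root) / 2]  # PC1 first
    components = []
    for lam in eigenvalues:
        # Step 4: set v1 = 1; the first row of (C - lam*I)V = 0 then
        # gives v2 = (lam - cxx) / cxy (requires cxy != 0).
        v1, v2 = 1.0, (lam - cxx) / cxy
        # Step 5: divide by the square root of the sum of squares.
        norm = math.sqrt(v1 ** 2 + v2 ** 2)
        components.append((v1 / norm, v2 / norm))
    return eigenvalues, components

# Made-up sample values for the two attributes.
x = [4, 8, 13, 7]
y = [11, 4, 5, 14]
eigenvalues, components = pca_2d(x, y)
print([round(v, 4) for v in eigenvalues])
print([tuple(round(c, 4) for c in pc) for pc in components])
```

The larger eigenvalue belongs to PC1, and the dot product of the two resulting eigenvectors is zero, which is the orthogonality property noted earlier.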

- Independent Component Analysis (ICA)

- Linear Discriminant Analysis (LDA)

- Locally Linear Embedding (LLE)

- t-distributed Stochastic Neighbor Embedding (t-SNE)

- Heuristic Search Algorithms

- Feature Importance

- Deep Learning

- Factor Analysis

- Singular Value Decomposition (SVD)