Data - Machine Learning

- How much data is required?

- Rule of thumb: if there are "X" features, then the data should contain at least 10 * X records

- Dimensionality reduction & clustering algorithms usually work efficiently with fewer than 10,000 records

- Regression analysis & classification algorithms usually work efficiently with fewer than 100,000 records

- Neural networks usually require more than 100,000 records

- Data Types

- Structured

- In the form of tables

- Contains rows (cases/observations) and columns (variables/dimensions/attributes)

- Unstructured

- Contains images, videos, audio, messages

- Big Data

- Extremely large volume of data

- Training & Testing Data

- After preprocessing, select the dependent & independent variables, then split the data

- Select the target attribute, then divide the data into 2 parts

- Use the first part to train the model and the second part to test it

- Measure the error by comparing the predicted output with the actual output

- Code

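      from sklearn.model_selection import train_test_split

      # "data" is assumed to be a pandas DataFrame that is already preprocessed
      X = data[["val1", "val2"]]  # Independent variables
      y = data["val3"]            # Dependent variable

      # Splitting data: 70% for training, 30% for testing
      X_train, X_test, y_train, y_test = train_test_split(
          X, y, test_size=0.3, random_state=42  # any fixed integer "N" works
      )

- Random State

- Default value is "None", which gives a different split on every run

- A fixed integer "N" generates the same split on every run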
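To show what a fixed random state does, here is a minimal sketch of a seeded split written with the standard library only. It is not how scikit-learn implements `train_test_split`; the helper name `split_rows` and its parameters are made up for illustration.

```python
import random

def split_rows(rows, test_size=0.3, seed=None):
    """Shuffle a copy of rows and split it into (train, test)."""
    shuffled = list(rows)
    # seed=None gives a different order on every run; a fixed seed repeats it
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_size)
    return shuffled[n_test:], shuffled[:n_test]

rows = list(range(10))
train_a, test_a = split_rows(rows, test_size=0.3, seed=7)
train_b, test_b = split_rows(rows, test_size=0.3, seed=7)

print(len(train_a), len(test_a))  # 7 training rows, 3 testing rows
print(test_a == test_b)           # same seed, same split
```

Every row lands in exactly one of the two parts, so the test part is never seen during training.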

- Managing Missing Features/Values/Attributes

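- Remove the record that has the missing value

- Create a sub-model to predict the missing value

- Automatic strategy: fill in the missing value with one of the following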

- Mean

- Median

- Mode
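The automatic strategy can be sketched with the standard `statistics` module. The helper `impute` and the use of `None` to mark a missing value are assumptions made for this example.

```python
from statistics import mean, median, mode

def impute(values, strategy="mean"):
    """Replace None entries with the mean, median, or mode of the known values."""
    known = [v for v in values if v is not None]
    fill = {"mean": mean, "median": median, "mode": mode}[strategy](known)
    return [fill if v is None else v for v in values]

ages = [20, None, 30, 30, None, 40]
print(impute(ages, "mean"))
print(impute(ages, "median"))
print(impute(ages, "mode"))
```

The median is usually the safer choice when the column has outliers, and the mode is the only one of the three that also works for categorical columns.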

- Managing Categorical Data

- One Hot Encoding Scheme

- Number of Labels of Categorical feature = N

- Size of Vector (Number of Binary features) = N

- Encode a value by setting the bit for that value to 1 and all other bits to 0

- Dummy Coding Scheme

- Size of Vector (Number of Binary features) = N - 1

- Drop the first or last feature; the dropped label is encoded as "0" in every remaining feature

- Effect Coding Scheme

- Size of Vector (Number of Binary features) = N - 1

- Drop the first or last feature; the dropped label is encoded as "-1" in every remaining feature
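The three schemes differ only in vector size and in how the dropped label is written. A minimal sketch, assuming the last label is the one that is dropped; the function `encode` is hypothetical and not a library API.

```python
def encode(value, labels, scheme="one_hot"):
    """Encode one categorical value as a list of numbers.

    one_hot: N features, 1 at the value's position.
    dummy:   N - 1 features, the last label is written as all 0s.
    effect:  N - 1 features, the last label is written as all -1s.
    """
    if scheme not in ("one_hot", "dummy", "effect"):
        raise ValueError(f"unknown scheme: {scheme}")
    index = labels.index(value)
    if scheme == "one_hot":
        return [1 if i == index else 0 for i in range(len(labels))]
    width = len(labels) - 1
    if index == width:  # the value is the dropped label
        return [0] * width if scheme == "dummy" else [-1] * width
    return [1 if i == index else 0 for i in range(width)]

labels = ["red", "green", "blue"]
for scheme in ("one_hot", "dummy", "effect"):
    print(scheme, [encode(v, labels, scheme) for v in labels])
```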

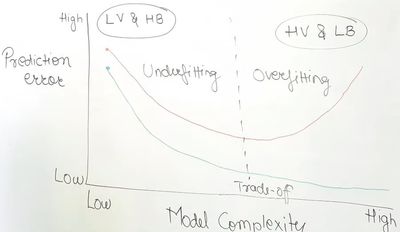

- Bias & Variance

- Bias is the gap between the predicted value and the actual value in the data

- Variance is how widely the predicted values are scattered relative to each other

- Bias-Variance Trade-Off

- Red represents test data and Green represents training data
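As a rough numeric illustration of the two definitions, the sketch below takes several predictions for the same input (assumed here to come from models trained on different samples) and measures their gap from the actual value and their spread. The numbers and the helper `bias_and_variance` are invented for the example.

```python
from statistics import mean, pvariance

def bias_and_variance(predictions, actual):
    """Return (bias, variance) for several predictions of one actual value."""
    bias = mean(predictions) - actual      # gap between average prediction and truth
    variance = pvariance(predictions)      # scatter of predictions around their mean
    return bias, variance

actual = 10.0
predictions = [12.0, 11.0, 13.0, 12.0]
bias, variance = bias_and_variance(predictions, actual)
print(bias, variance)
```

A model with low bias but high variance would give predictions centred on 10.0 yet far apart from each other; the trade-off is about reducing both at once.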

- Time Series

- Level

- Average of data

- Trend

- Uptrend => Increasing with time

- Downtrend => Decreasing with time

- Seasonality

- Patterns repeating over a period of time

- Cyclic Pattern

- Patterns repeating over a long period of time

- Noise

- Random variation in the data with no pattern
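One way to see how these components combine is to build a synthetic series by adding them together. This is only a sketch under an additive assumption; the function `make_series` and all of its default values are invented for the example, and the cyclic pattern is left out for brevity.

```python
import math
import random

def make_series(n, level=50.0, slope=0.5, period=12,
                amplitude=5.0, noise_sd=1.0, seed=0):
    """Return n points built as level + trend + seasonality + noise."""
    rng = random.Random(seed)
    series = []
    for t in range(n):
        trend = slope * t                                        # uptrend if slope > 0
        seasonality = amplitude * math.sin(2 * math.pi * t / period)
        noise = rng.gauss(0.0, noise_sd)                         # no pattern
        series.append(level + trend + seasonality + noise)
    return series

clean = make_series(24, noise_sd=0.0)   # level, trend and seasonality only
noisy = make_series(24)                 # the same series with noise added
print(round(clean[12] - clean[0], 6))   # one full season apart: only the trend remains
```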

- Feature Engineering

- Domain Knowledge => Can verify data

- Visualization => Check for errors visually

- Sampling

- Selecting a small sample out of the population

- Population is Complete set of data

- Types

- Random

- Selecting records at random from the population to create a sample

- Systematic

- Selecting every "Nth" record from the population to create a sample

- Stratified

- Stratum

- Subset of the population whose members share a common characteristic; the sample is drawn from every stratum
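The three sampling types can be sketched with the standard `random` module. The function names, the `key` callback, and the toy population are assumptions made for this example.

```python
import random

def random_sample(population, k, seed=None):
    """Pick k records at random, without replacement."""
    return random.Random(seed).sample(population, k)

def systematic_sample(population, step, start=0):
    """Pick every step-th record, beginning at index start."""
    return population[start::step]

def stratified_sample(population, key, fraction, seed=None):
    """Group records into strata by key, then sample the same fraction of each."""
    rng = random.Random(seed)
    strata = {}
    for item in population:
        strata.setdefault(key(item), []).append(item)
    sample = []
    for members in strata.values():
        k = max(1, round(len(members) * fraction))
        sample.extend(rng.sample(members, k))
    return sample

# Toy population: 80 records in group A, 20 in group B
population = [{"id": i, "group": "A" if i < 80 else "B"} for i in range(100)]

picked = stratified_sample(population, key=lambda r: r["group"], fraction=0.1, seed=1)
print(len(random_sample(population, 10, seed=1)))
print([r["id"] for r in systematic_sample(population, 25)])
print(sum(r["group"] == "B" for r in picked), "of", len(picked), "from group B")
```

Stratified sampling keeps the 80/20 group proportions in the sample, which a purely random draw of 10 records does not guarantee.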

- Estimate

- Point

- A single value computed from the sample, such as the mean or median

- Interval

- A range of values computed from the sample

- More informative than a point estimate because it also conveys the uncertainty of the estimate

- Performance Measure (Loss Functions) => Gives direction of Optimal solution

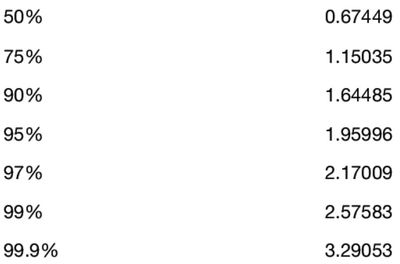

- Confidence Interval (CI)

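- Range of values that is expected to contain the target value with a given confidence level

- Interval = x̄ ± Z * σ/√n, where x̄ is the sample mean, Z is the critical value for the chosen confidence level and n is the sample size

- The population mean will lie in this interval with the given confidence

- "σ" is the standard deviation of the entire population

- If it is not given, use the standard deviation of the sample, provided the sample contains more than 30 records (n > 30)

- Margin of Error (MOE) = Z * σ/√n

- Half the width of the confidence interval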
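The interval and margin of error can be computed with the standard library. A minimal sketch, assuming a normal (Z) interval; the helper `confidence_interval` and the sample values are invented for the example.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev

def confidence_interval(sample, confidence=0.95, sigma=None):
    """Return (low, high, margin_of_error) for the mean, using x̄ ± Z * σ/√n."""
    n = len(sample)
    if sigma is None:
        # Population σ unknown: fall back to the sample standard deviation (n > 30)
        sigma = stdev(sample)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    moe = z * sigma / sqrt(n)
    x_bar = mean(sample)
    return x_bar - moe, x_bar + moe, moe

sample = [48, 52] * 18                      # 36 records with mean 50
low, high, moe = confidence_interval(sample, confidence=0.95, sigma=6)
print(round(low, 2), round(high, 2), round(moe, 2))
```

Raising the confidence level widens the interval, while a larger sample narrows it because the margin shrinks with √n.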

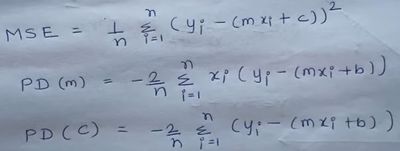

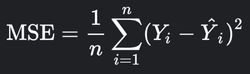

- Mean Squared Error (MSE) = Cost Function = l1 Loss

- Sum of error between actual value and predicted value

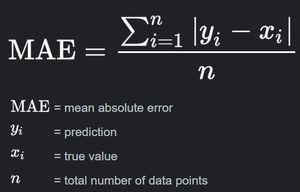

- Mean Absolute Error (MAE) = l2 Loss

- R Squared (R²) = Coefficient of Determination (COD) = 1 - RSS/TSS

- Closeness of the regression line (best fit) to the actual values

- RSS (Residual Sum of Squares) = Sum of Squares Error (SSE) = Σ(Actual Value - Predicted Value)²

- TSS (Total Sum of Squares) = Σ(Actual Value - Mean)²

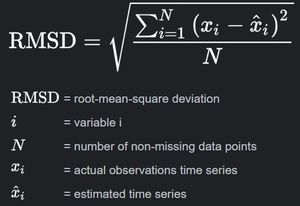

- Root Mean Square Error (RMSE) = √MSE
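The four measures above can be computed side by side in a few lines. This is a plain-Python sketch with made-up data; the function name `regression_metrics` is hypothetical.

```python
from math import sqrt

def regression_metrics(actual, predicted):
    """Return MSE, RMSE, MAE and R squared for paired actual/predicted values."""
    n = len(actual)
    errors = [a - p for a, p in zip(actual, predicted)]
    mean_actual = sum(actual) / n
    rss = sum(e * e for e in errors)                     # residual sum of squares
    tss = sum((a - mean_actual) ** 2 for a in actual)    # total sum of squares
    mse = rss / n
    return {
        "mse": mse,
        "rmse": sqrt(mse),
        "mae": sum(abs(e) for e in errors) / n,
        "r2": 1 - rss / tss,
    }

actual = [3.0, 5.0, 7.0, 9.0]
predicted = [2.0, 5.0, 8.0, 9.0]
metrics = regression_metrics(actual, predicted)
print(metrics)
```

RMSE is in the same unit as the target, which makes it easier to read than MSE, and R² close to 1 means the line explains most of the variation in the actual values.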

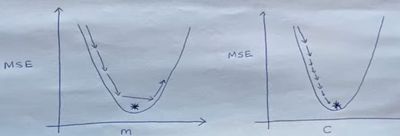

- Gradient Descent

- Finds the values of "m" & "c" in y = mx + c for which the line is the best fit (MSE/cost is lowest)

- Finds the global minimum of the cost by taking steps of variable length (the steps shrink as the slope flattens)

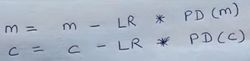

- Learning Rate (LR)

- Generally taken between 0 and 0.1

- To find the values of "m" & "c"

- Wnew = Wold - LR * PD, where PD is the partial derivative of the cost with respect to that weight

- Stochastic Gradient Descent

- Uses 1 data point per weight update when computing the PD

- Used in linear regression

- Mini Batch Gradient Descent

- Uses more than 1 but fewer than "n" data points per weight update

- Used in ANN
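The update rule Wnew = Wold - LR * PD can be sketched for the line y = mx + c with MSE as the cost. In this illustrative version the `batch_size` parameter switches between the variants: all "n" points per update (batch), 1 point (stochastic), or something in between (mini batch). The function `fit_line`, its defaults, and the toy data are assumptions made for the example.

```python
import random

def fit_line(xs, ys, lr=0.05, epochs=2000, batch_size=None, seed=0):
    """Fit y = m*x + c by gradient descent on the mean squared error."""
    rng = random.Random(seed)
    n = len(xs)
    batch_size = n if batch_size is None else batch_size
    m, c = 0.0, 0.0
    indices = list(range(n))
    for _ in range(epochs):
        rng.shuffle(indices)
        for start in range(0, n, batch_size):
            batch = indices[start:start + batch_size]
            # Partial derivatives (PD) of the MSE over this batch
            pd_m = sum(-2 * xs[i] * (ys[i] - (m * xs[i] + c)) for i in batch) / len(batch)
            pd_c = sum(-2 * (ys[i] - (m * xs[i] + c)) for i in batch) / len(batch)
            # Wnew = Wold - LR * PD
            m -= lr * pd_m
            c -= lr * pd_c
    return m, c

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]   # generated from y = 2x + 1

for size in (None, 1, 2):         # batch, stochastic, mini batch
    m, c = fit_line(xs, ys, batch_size=size)
    print(size, round(m, 3), round(c, 3))
```

A learning rate that is too large makes the updates overshoot and diverge, while one that is too small needs many more epochs to reach the minimum.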