Neural Network (NN) Models - Artificial Intelligence

Artificial Neural Network (ANN)

- Used with structured data, such as data in tabular form

- Types

  - Single-layer Feed Forward

  - Multilayer Feed Forward

  - Recurrent

- Encoding Techniques => Used to represent categorical data in a numerical format that can be used as input for training

  - One-Hot Encoding

  - Label Encoding

  - Binary Encoding

  - Manual Encoding
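
A minimal plain-Python sketch of the four encodings, assuming a single categorical column held in a list. Categories are sorted alphabetically to fix the column/code order; that ordering, the helper names, and the binary-encoding variant (label code written in base 2, starting from 0) are illustrative choices rather than any specific library's behaviour. In practice, pandas (`get_dummies`) or scikit-learn (`OneHotEncoder`, `LabelEncoder`) are typically used.

```python
def one_hot_encode(values):
    # One column per category; a 1 marks the row's category
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values], categories


def label_encode(values):
    # Each category gets an integer code (alphabetical order in this sketch)
    categories = sorted(set(values))
    mapping = {c: i for i, c in enumerate(categories)}
    return [mapping[v] for v in values], mapping


def binary_encode(values):
    # Label-encode first, then write each code in binary: one column per bit
    codes, mapping = label_encode(values)
    n_bits = max(1, (len(mapping) - 1).bit_length())
    return [[(code >> bit) & 1 for bit in reversed(range(n_bits))] for code in codes], mapping


def manual_encode(values, mapping):
    # Hand-written mapping, useful when categories have a natural order
    return [mapping[v] for v in values]


colors = ["red", "green", "blue", "green"]
print(one_hot_encode(colors)[0])  # [[0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 1, 0]]
print(label_encode(colors)[0])    # [2, 1, 0, 1]
print(binary_encode(colors)[0])   # [[1, 0], [0, 1], [0, 0], [0, 1]]
print(manual_encode(["low", "high", "medium"], {"low": 0, "medium": 1, "high": 2}))  # [0, 2, 1]
```

Label encoding imposes an order (blue < green < red) that unordered categories do not have, which is why one-hot encoding is usually preferred for them; binary encoding needs only ceil(log2 k) columns for k categories instead of k.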

- Steps

  - Import libraries and datasets

  - Data Exploration and Analysis

  - Remove Null Values

  - Data Preprocessing

    - Data Encoding

  - Data Splitting

  - Build Model => Regression, Classification

    - Initialize => Sequential

    - Add layers => Dense

    - Optimizer, Loss function

  - Compile & Train model

  - Test & Compare model

  - Predict
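
The first data-handling steps (remove null values, then split into training and test sets) can be sketched in plain Python as below, assuming rows are dictionaries and a missing value is `None`. The sample rows, the 25% test fraction, and the fixed seed are arbitrary illustrative choices; with pandas and scikit-learn the same steps are usually `DataFrame.dropna()` and `train_test_split`.

```python
import random


def remove_null_rows(rows):
    # Keep only rows where no field is missing (None)
    return [row for row in rows if all(value is not None for value in row.values())]


def split_train_test(rows, test_fraction=0.25, seed=42):
    # Shuffle a copy with a fixed seed so the split is reproducible
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


# Hypothetical sample data
data = [
    {"age": 25, "income": 40000, "bought": 0},
    {"age": None, "income": 52000, "bought": 1},  # has a null value
    {"age": 40, "income": 61000, "bought": 1},
    {"age": 31, "income": None, "bought": 0},     # has a null value
    {"age": 52, "income": 75000, "bought": 1},
    {"age": 23, "income": 30000, "bought": 0},
    {"age": 45, "income": 58000, "bought": 1},
]
clean = remove_null_rows(data)
train, test = split_train_test(clean)
print(len(clean), len(train), len(test))  # 5 3 2
```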

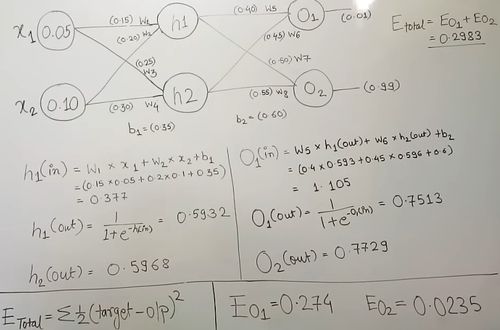

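- Feed Forward Neural Network (FNN)

  - Input Layer > Hidden Layer > Output Layer

  - Adding hidden layers lets the network learn more complex patterns, which can increase accuracy (although too many can lead to overfitting)

  - The Heaviside step activation function is used in the output layer of a simple perceptron; networks trained with back propagation typically use sigmoid or softmax instead, because the step function's gradient is zero almost everywhere

  - Considers only the current input; no memory of past inputs is used

  - No loops are formed; data moves only forward

  - Not suited to sequential data, since it cannot remember earlier inputs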
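
A minimal sketch of a feed-forward pass with Heaviside step activations, using hand-picked (not trained) weights so that a two-neuron hidden layer computes XOR. XOR is a standard example that a single-layer perceptron cannot represent, so it also shows why a hidden layer helps. Data flows strictly input > hidden > output, and each call depends only on its current inputs.

```python
def step(z):
    # Heaviside step activation: 1 if z >= 0, else 0
    return 1 if z >= 0 else 0


def neuron(inputs, weights, bias):
    # Weighted sum of inputs plus bias, passed through the step function
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def xor_network(x1, x2):
    # Hidden layer (hand-picked weights): one neuron behaves like OR, the other like AND
    h_or = neuron([x1, x2], [1, 1], -0.5)
    h_and = neuron([x1, x2], [1, 1], -1.5)
    # Output layer: "OR and not AND", i.e. XOR
    return neuron([h_or, h_and], [1, -1], -0.5)


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, xor_network(a, b))  # outputs 0, 1, 1, 0
```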

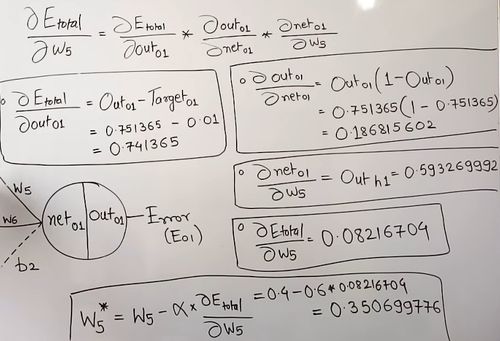

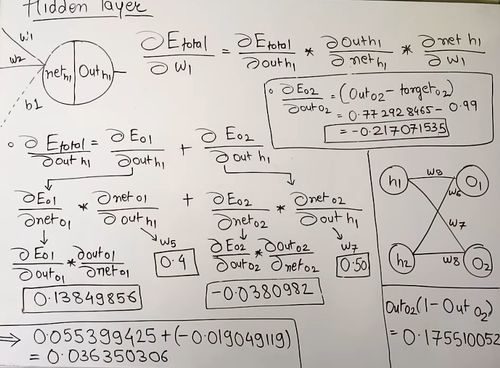

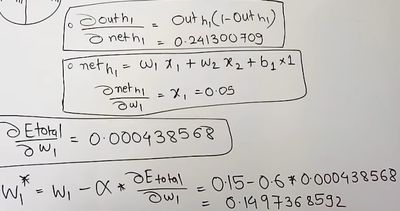

- Back Propagation Algorithm (Backward Propagation of Errors)

  - Goal is to reach the target value

  - Reduces the error by propagating it backward from the output layer and adjusting the weights
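
A minimal sketch of back propagation for a network with 2 inputs, 2 hidden units, and 1 output, using sigmoid activations and squared-error loss, trained on a single example so the output can be watched approaching the target value. The starting weights, learning rate, epoch count, and the example itself are arbitrary assumptions; real networks train on many examples, and libraries compute these gradients automatically.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(x, target, epochs=2000, lr=0.5):
    # Hypothetical starting weights: w_hidden[j][i] connects input i to hidden unit j
    w_hidden = [[0.1, -0.2], [0.3, 0.4]]
    b_hidden = [0.0, 0.0]
    w_out = [0.5, -0.5]
    b_out = 0.0
    errors = []
    for _ in range(epochs):
        # Forward pass
        h = [sigmoid(sum(w * xi for w, xi in zip(w_hidden[j], x)) + b_hidden[j]) for j in range(2)]
        y = sigmoid(sum(w * hj for w, hj in zip(w_out, h)) + b_out)
        errors.append(0.5 * (y - target) ** 2)
        # Backward pass: error signal at the output unit (loss gradient w.r.t. its pre-activation)
        delta_out = (y - target) * y * (1 - y)
        # Propagate the error signal back to each hidden unit (uses w_out before it is updated)
        delta_hidden = [delta_out * w_out[j] * h[j] * (1 - h[j]) for j in range(2)]
        # Gradient-descent updates: move each weight against its error gradient
        for j in range(2):
            w_out[j] -= lr * delta_out * h[j]
            b_hidden[j] -= lr * delta_hidden[j]
            for i in range(len(x)):
                w_hidden[j][i] -= lr * delta_hidden[j] * x[i]
        b_out -= lr * delta_out
    return y, errors


output, errors = train([1.0, 0.5], target=0.8)
print(output, errors[0], errors[-1])  # output is now close to the target; error has shrunk
```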

- Feedback Network

  - Allows loops and feedback connections

  - Also called a recurrent network

  - More complex to implement than a feed-forward network

  - Input Layer > Hidden Layer > Output Layer

- Sentiment analysis

  - Text Preprocessing

    - Remove special characters

    - Convert the entire text to lower (or upper) case

    - Split the text into words

    - Remove stopwords

    - Stemming / Lemmatization

    - Join the words back together

  - Vectorization

    - Convert text to numbers

  - Build Model
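
The preprocessing and vectorization steps, sketched in plain Python. The stopword list and the suffix-stripping "stemmer" are deliberately tiny stand-ins (assumptions for illustration); real pipelines typically use NLTK's stopword list with `PorterStemmer` or `WordNetLemmatizer`, and scikit-learn's `CountVectorizer` or `TfidfVectorizer`. Vectorization here is a simple bag-of-words count.

```python
import re

# Tiny illustrative stopword list (an assumption; real lists are much longer)
STOPWORDS = {"a", "an", "and", "i", "is", "it", "the", "this", "was", "were"}


def simple_stem(word):
    # Crude suffix stripping for illustration only, NOT the Porter stemmer
    for suffix in ("ing", "ed", "ly", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess(text):
    text = re.sub(r"[^A-Za-z\s]", " ", text)         # remove special characters
    text = text.lower()                               # convert to lower case
    words = text.split()                              # split into words
    words = [w for w in words if w not in STOPWORDS]  # remove stopwords
    words = [simple_stem(w) for w in words]           # stemming
    return " ".join(words)                            # join the words


def vectorize(docs):
    # Bag-of-words: one column per vocabulary word, value = word count
    vocab = sorted({w for doc in docs for w in doc.split()})
    vectors = [[doc.split().count(w) for w in vocab] for doc in docs]
    return vocab, vectors


docs = [preprocess("The movie was GREAT, I enjoyed the acting!!"), preprocess("Bad movie.")]
vocab, vectors = vectorize(docs)
print(docs)     # ['movie great enjoy act', 'bad movie']
print(vocab)    # ['act', 'bad', 'enjoy', 'great', 'movie']
print(vectors)  # [[1, 0, 1, 1, 1], [0, 1, 0, 0, 1]]
```

The resulting count vectors are what the model-building step would take as input.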

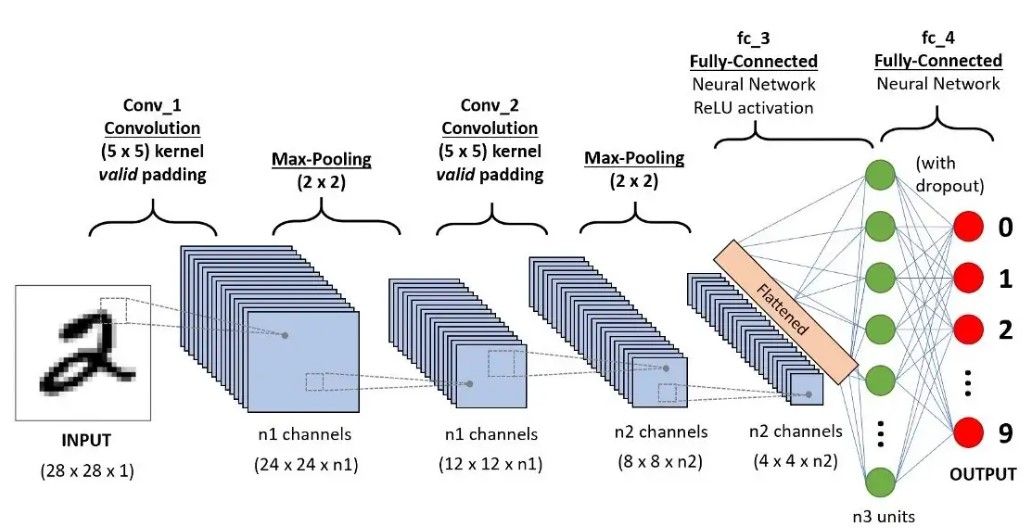

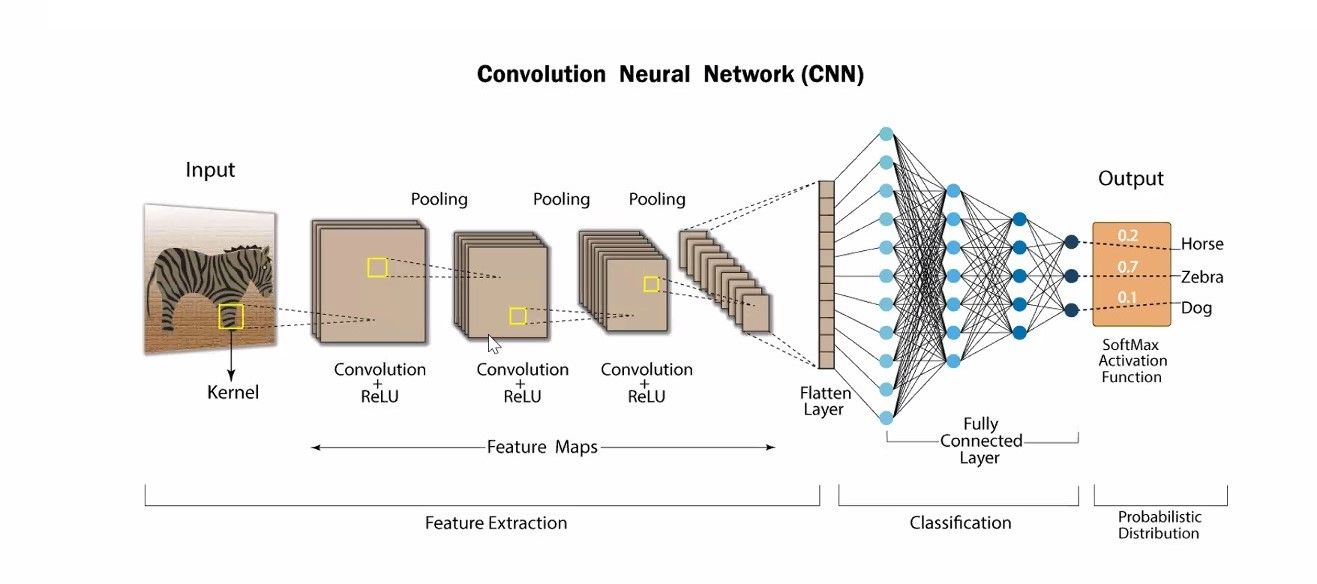

Convolutional Neural Network (CNN)

- Accepts input in the form of images

- Deep learning algorithm that takes an input image, assigns importance (learnable weights and biases) to various aspects/objects in the image, and learns to differentiate one from another

- Layers

  - Convolution => Learns to extract local patterns and features from the input data

    - Kernel/Filters > Feature Map

  - Pooling (max pooling) => Partitions the input into non-overlapping regions and keeps only the maximum value from each region, reducing its spatial size

  - Flattening => Converts multi-dimensional input data into a one-dimensional vector

  - Fully Connected Layer (Dense layer) => Applies a linear transformation to the input followed by a non-linear activation function
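
A plain-Python sketch of the four layer types on a tiny single-channel image, assuming stride 1 and no padding for the convolution, 2 x 2 max pooling, and ReLU as the dense layer's activation. As in most deep learning libraries, the "convolution" is computed as cross-correlation (the kernel is not flipped). The vertical-edge kernel and the dense weights are hand-picked for illustration, whereas a real CNN learns them during training.

```python
def conv2d(image, kernel):
    # Slide the kernel over the image (stride 1, no padding); each output value
    # is the sum of element-wise products, giving one entry of the feature map
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def max_pool(fmap, size=2):
    # Non-overlapping size x size regions; keep the maximum of each
    # (leftover rows/columns that do not fill a region are dropped)
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]


def flatten(fmap):
    # Row by row into a single one-dimensional list
    return [value for row in fmap for value in row]


def dense(x, weights, biases):
    # One neuron per row of `weights`: linear transformation, then ReLU
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]


# 6 x 6 image: dark left half (0), bright right half (1)
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
# Hand-picked vertical-edge kernel (a CNN would learn these values)
kernel = [[-1, 0, 1] for _ in range(3)]

feature_map = conv2d(image, kernel)  # 4 x 4, largest where the edge is
pooled = max_pool(feature_map)       # 2 x 2
vector = flatten(pooled)             # length 4
print(feature_map, pooled, vector, dense(vector, [[0.25, 0.25, 0.25, 0.25]], [0.0]))
```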

- Steps

  - Import libraries and datasets

  - Data Augmentation => ImageDataGenerator

  - Data Preprocessing

    - Normalize

  - Build Model => Regression, Classification

    - Initialize => Sequential

    - Add layers => Dense, Convolutional

  - Compile & Train

  - Test & Compare model & Predict
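
In Keras, `ImageDataGenerator` handles both augmentation and normalization through options such as `horizontal_flip=True` and `rescale=1./255`. The sketch below only mimics those two ideas in plain Python, assuming a grayscale image stored as nested lists of 0-255 pixel values; it is not the Keras implementation.

```python
def normalize(image, max_value=255):
    # Scale 0-255 pixel values into the 0-1 range (similar in spirit to rescale=1./255)
    return [[pixel / max_value for pixel in row] for row in image]


def horizontal_flip(image):
    # Mirror the image left-to-right; the label stays the same,
    # so the flipped copy serves as an extra training example
    return [list(reversed(row)) for row in image]


image = [[0, 51, 255], [102, 204, 255]]
print(normalize(image))        # [[0.0, 0.2, 1.0], [0.4, 0.8, 1.0]]
print(horizontal_flip(image))  # [[255, 51, 0], [255, 204, 102]]
```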

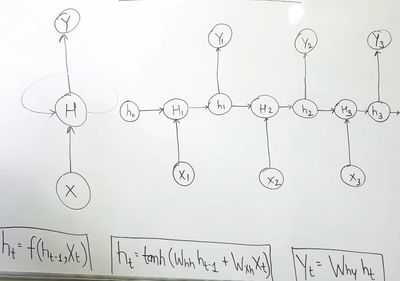

Recurrent Neural Network (RNN)

- Uses memory that stores past input states, so each step considers the previous state

- The output of the hidden layer at each timestep is passed to the next timestep, where it is combined with that step's input (Xt)

- The initial hidden state (h0) is set to 0 and combined with the current input (X1) to produce the current hidden state (h1), which is used to generate the output (Y1)

- h1 is then passed to the next step as the previous hidden state

- ht = ActivationFunction(WeightPreviousHiddenState * h(t-1) + WeightCurrentInput * Xt)

- Yt = WeightOutput * ht

- LSTM (Long Short-Term Memory)
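
A minimal sketch of the hidden-state recurrence, assuming a single hidden unit (so every weight is a scalar rather than a matrix), tanh as the activation function, and no bias terms, matching the formulas as written. The weight values in the example are arbitrary.

```python
import math


def rnn_forward(inputs, w_hh, w_xh, w_hy):
    # Single-unit RNN: scalar weights stand in for the usual weight matrices
    h = 0.0  # initial hidden state h0 = 0
    hidden_states, outputs = [], []
    for x_t in inputs:
        # h_t = tanh(w_hh * h_(t-1) + w_xh * x_t): mixes memory with the current input
        h = math.tanh(w_hh * h + w_xh * x_t)
        hidden_states.append(h)
        # y_t = w_hy * h_t
        outputs.append(w_hy * h)
    return hidden_states, outputs


hidden, outputs = rnn_forward([1.0, 0.0, 0.0], w_hh=0.5, w_xh=1.0, w_hy=2.0)
print(hidden)
```

Even though the second and third inputs are 0, the second and third hidden states are non-zero: the recurrence carries information from the first input forward, which a feed-forward network cannot do.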

- Steps

  - Import libraries and datasets

  - Data Exploration and Analysis

  - Remove Null Values

  - Data Preprocessing

    - Normalization

  - Data Splitting

  - Build Model => Regression, Classification

    - Initialize => Sequential

    - Add layers => SimpleRNN / LSTM, Dense

    - Optimizer, Loss function

  - Compile & Train model

  - Test & Compare model

  - Predict
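
For RNNs, normalization and splitting are commonly applied to a time series, which is the assumption behind this sketch: values are min-max scaled to 0-1, turned into fixed-length input windows with the following value as the target, and split chronologically rather than shuffled, so the model is tested on data that comes after its training data. The series, the window length, and the 80/20 split are illustrative choices.

```python
def min_max_scale(values):
    # Scale values into [0, 1]; assumes the values are not all equal
    low, high = min(values), max(values)
    return [(v - low) / (high - low) for v in values]


def make_windows(series, window):
    # Each input sample is `window` consecutive values; its target is the next value
    inputs = [series[i:i + window] for i in range(len(series) - window)]
    targets = [series[i + window] for i in range(len(series) - window)]
    return inputs, targets


def chronological_split(inputs, targets, train_fraction=0.8):
    # No shuffling: earlier windows train the model, later windows test it
    cut = int(len(inputs) * train_fraction)
    return inputs[:cut], inputs[cut:], targets[:cut], targets[cut:]


scaled = min_max_scale([10, 20, 30, 40, 50, 60])
X, y = make_windows(scaled, window=3)
X_train, X_test, y_train, y_test = chronological_split(X, y)
print(len(X), len(X_train), len(X_test))  # 3 2 1
```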